Digital health is already an important Hot Topic, but it’s still a work in progress

Andrei Mihai

When Shwetak Patel proposed ‘Digital Health’ as a Hot Topic for the HLF, he had no idea a pandemic was coming. None of us did. But Patel rightly predicted that the importance of digital health was bound to explode. So in a presentation followed by two panel discussions, the advantages and pitfalls of digital health were thoroughly explored in this year’s Hot Topic.

“The current pandemic is taking us into a place where we’re gonna see how we define healthcare,” said Patel, and it’s hard to disagree with him. Few things cut across every single layer of society the way the pandemic has, and just one year ago, our current situation would have seemed unimaginable.

But while the pandemic caught most of the world off guard, we were remarkably well prepared for it in several ways. Countless data dashboards and reports are freely accessible to anyone, a trove of research is published every week, and technology has already helped us track cases and direct impactful interventions. Of course, it hasn’t always worked, but if we look at how things have unfolded thus far, it’s the political response that has often been underwhelming, not the research and technology.

“People say ‘we’re in a very unique pandemic’ — yes we are, but if you think about the research community, there’s a lot of things that we borrowed from here and there, and we were kind of ready for. Some of these things were already explored,” notes Patel.

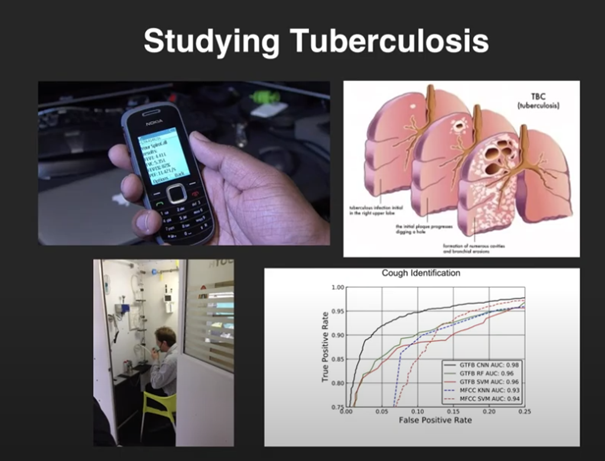

Much of Patel’s work has revolved around low-cost, easy-to-deploy sensing systems. For instance, smartphone sensors are increasingly being used in medical diagnosis, and Patel notes that the work done on the diagnosis of tuberculosis from smartphone-detected coughs can also be adapted to something like COVID-19.

Alas, digital health is no gravy train: for every successful project, there are a dozen that failed or even caused more problems than they solved, says Stefanie Friedhoff, a journalist specializing in global health.

“The field of global health is a large graveyard of projects and innovations that didn’t work,” she notes. Sometimes, it backfires spectacularly. “Just recently in Rwanda somebody tried to introduce a contact tracing app that asked for ethnicity, and with the genocide on everybody’s mind, not a good question to include.”

Friedhoff notes that some of the pressing problems in global health have been around forever (like access to healthy nutrition and clean water), but others are relatively new and can be addressed through data and digital technology. One example is contact tracing, which is important not only in the pandemic but also for other infectious diseases such as Ebola. But if all we do is transplant systems from other places into developing countries, without adapting them by involving the local community and considering context, we may end up not only failing to address these needs, but making the problems even worse.

Ziad Obermeyer, Associate Professor of Health Policy at UC Berkeley, also points to another problem: algorithms and data can only do what we tell them to do, not what we want them to do.

“It’s unlikely that any of these ‘out of the box’ solutions will work, these aren’t things that we typically see when we look at accuracy. Algorithms can do the task we set them to do, but they can’t tell whether that’s the right task to do. So we’re seeing increasing bias in many fields.”

The problem of bias in algorithms and data comes up time and time again, but in global health, the problems are much more pressing than in other fields, because we’re dealing with people’s lives and health. In an ideal world, all we’d need to do is fix the bias in the algorithms, but as the panel points out, oftentimes the data is biased because our society is biased.

So what can be done? It’s still a work in progress, says Arunan Skandarajah, Program Officer at the Bill and Melinda Gates Foundation, but funders tend to be supportive of projects that address these biases. On a more practical level, if a bias is tied to a particular attribute (such as skin tone), don’t use that attribute as one of the predictors in your model.

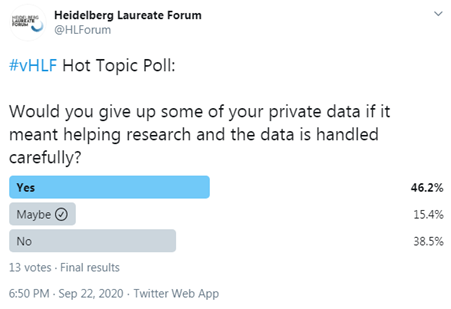

The second part of the panel drifted more onto the topic of data and privacy, which was bound to happen when you have Katherine Chou (Director of Research & Innovations at Google) and Aisha Walcott (a research scientist and manager at IBM Research Africa) on the panel. It’s always a tradeoff when it comes to medical data: on one hand, it’s some of the most sensitive data, but on the other hand, it’s also the data that can make a huge difference in terms of saving lives. After all, data is a big reason why we learned so much about COVID-19 in such a short period. But where and how this tradeoff should be made remains a contentious matter.

“We all make tradeoffs, I think people understand tradeoffs and they’re willing to give off some of their privacy when there’s something in return,” says Obermeyer, citing Google Maps as a good example: you tell Google Maps where you are, but on the flip side, you don’t need to carry a map with you anymore. But moderator Eva Wolfangel points out that Google has been at the center of several data scandals. Google’s community mobility data, for instance, offered valuable indications about the pandemic lockdown without sacrificing anyone’s individual privacy, but it left many people wondering just how much data Google has about us.

There are mechanisms that can alleviate this problem, Skandarajah points out. For instance, by averaging data across a population, you can preserve anonymity while still gathering valuable information.

But while data privacy must absolutely be ensured, what about the life-saving algorithms that were never developed due to lack of data? Despite many valuable insights from the panelists, there are few clear-cut answers on the data privacy issue, which will undoubtedly remain caught in a tug of war for decades to come.

For now, Patel suggests, maybe we can do more with the data we already have available. Crossovers are very useful, he notes. You don’t need to reinvent the wheel: there are already a lot of good data and ideas out there.

“Just an example from one of the crossover opportunities we have from TB (some of that was in collaboration with the Gates Foundation). We could detect superspreaders. TB spreads roughly similarly to COVID-19, with aerosolized particles that can be infected,” he says, so it could be a good opportunity to adapt some of the solutions we already have available.

Another point on which all panelists agreed is the need for lasting, sustainable projects. Too often, projects get funding, carry on for a few years, and then stop without any continuation whatsoever. That will just not do. If we want to truly transition towards a digital healthcare system, we need not only technical solutions and good values, but also continuity.

The post Digital health is already an important Hot Topic, but it’s still a work in progress originally appeared on the HLFF SciLogs blog.