Bits and Neurons: Is biological computation on its way?

Andrei Mihai

The field of artificial intelligence (AI) has grown tremendously in recent years. It’s already being deployed almost routinely in some industries, and it’s probably safe to say we’re seeing an AI revolution.

But artificial intelligence has remained, well, artificial. Can AI — or computers — actually be organic (as in, biological)?

Researchers have tried poking at the problem from different angles, from simply learning from biological processes to using biological structures as software (or even hardware) to inserting chips straight into the brain. All approaches come with their own advantages and challenges.

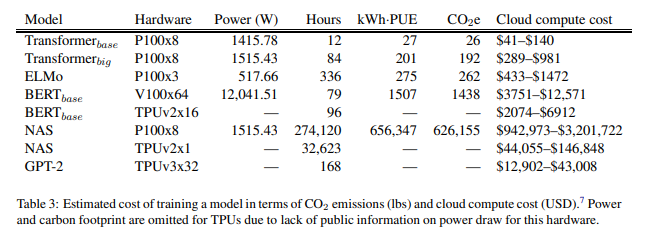

For starters, one reason to look for biological alternatives is energy: AI as it works today consumes a lot of it. In a recent study, researchers at the University of Massachusetts Amherst found that training AI models can take thousands of watts, whereas natural intelligence runs on just 20 watts. When you also consider that the electricity powering computers often does not come from renewable sources, the problem becomes even more pressing.

Here’s a breakdown of the costs and energy they calculated for various AI models:

“As a result, these models are costly to train and develop, both financially, due to the cost of hardware and electricity or cloud compute time, and environmentally, due to the carbon footprint required to fuel modern tensor processing hardware,” the researchers write.

Of course, you rarely train one model on one dataset and stop there: development often takes hundreds of runs, which further ramps up the energy expense. There is still some room to make AI more efficient, but the more complex models become, the more energy they are likely to use.
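Watts measure power, not energy, so comparing the brain’s 20 watts with “thousands of watts” means multiplying by time, and repeated training runs multiply the total again. As a purely illustrative assumption, take 1,000 W (the low end of “thousands of watts”) for a single day-long training run and compare it with a brain running for the same day:

$$
\begin{aligned}
E &= N \, P \, t \\
E_{\text{brain}} &= 20\ \text{W} \times 24\ \text{h} = 480\ \text{Wh} = 0.48\ \text{kWh} \\
E_{\text{AI}} &= 1\,000\ \text{W} \times 24\ \text{h} = 24\ \text{kWh} = 50 \, E_{\text{brain}}
\end{aligned}
$$

Here $N$ is the number of runs, $P$ the power draw and $t$ the duration of each run. With a hypothetical $N = 100$ such runs (the low end of “hundreds of runs”), the AI side reaches 2,400 kWh, which is what a 20 W brain uses in 5,000 days.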

It should be said that in some fields, AI can already detect patterns far beyond the current ability of the human brain, and it can keep improving for years to come. Even so, given how much energy it consumes, the biological brain still seems to have a big edge in efficiency.

After all, an AI model is still suited to only a very narrow task, whereas humans and other animals navigate myriad situations.

Organic software, organic hardware

It’s not just the software part — using biology as hardware has also picked up steam.

Various approaches are being researched. So-called “wetware computers”, which are essentially computers made of organic matter, are still largely conceptual, but some prototypes have shown promise.

The birth of the field took place around 1999, with the work of William Ditto at the Georgia Institute of Technology. He constructed a simple neurocomputer capable of addition using leech neurons, which were chosen because of their large size.

It’s not easy to manipulate the currents inside neurons and harness them for calculation, but it worked, although the end result was more a proof of concept than anything else. For all the neurons’ power and energy efficiency, their signals often appeared chaotic and hard to control. Ditto said he believed that with more neurons, the chaotic signals would self-organize into a structured pattern (as they do in living creatures), but this remains a hypothesis rather than a proven fact.

Nevertheless, Ditto’s research effectively pioneered a new field, bringing it from the realm of sci-fi into reality. However, although our technological ability has improved dramatically since 1999, our understanding of the underlying biology has progressed more slowly. Daniel Dennett, a professor at Tufts University in Massachusetts, discussed the importance of distinguishing between the hardware and software of a conventional computer and what goes on in an organic one. “The mind is not a program running on the hardware of the brain,” Dennett famously wrote, arguing that some level of progress in cognitive science is necessary to truly make progress with this approach.

Meanwhile, approaches using other biological components have been making steady progress. A team from UC Davis and Harvard demonstrated a DNA computer that could run 21 different programs such as sorting, copying, and recognizing palindromes.

It wasn’t the first DNA computer. In 2002, J. Macdonald, D. Stefanovic and M. Stojanovic created a DNA computer capable of playing tic-tac-toe against a human player, and even earlier, in 1994, researchers working in Germany designed a DNA computer that solved the chess knight’s tour problem.

Increasingly, research is pointing toward low-energy, customizable DNA computers that could offer an organic, brain-like learning system. MIT researchers have also pioneered a cell-based computer that can react to stimuli.

“You can build very complex computing systems if you integrate the element of memory together with computation,” said Timothy Lu, then an associate professor of electrical engineering and computer science and of biological engineering at MIT’s Research Laboratory of Electronics.

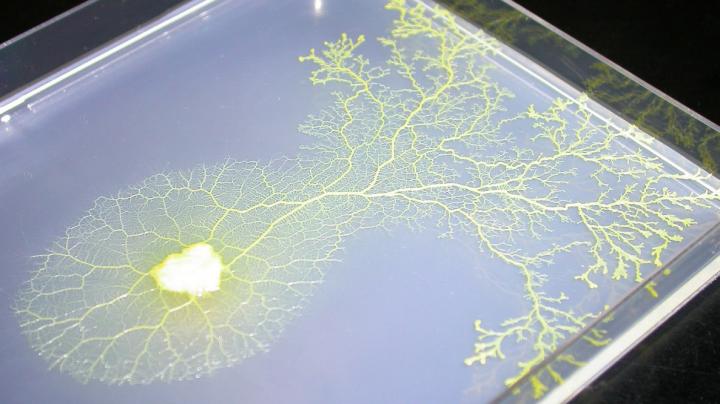

Meanwhile, a group of researchers in Japan has taken a different path, developing an analog, amoeba-based computer that offers efficient solutions to the Traveling Salesman Problem, which is very difficult for conventional computers.

The Traveling Salesman Problem is deceptively complicated, because the question it asks seems simple. You’re visiting a number of different cities to sell your products (each city exactly once) and must then return home. Given all the distances, what’s the shortest possible route you can take?
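A direct way to answer the question is to try every possible route. The minimal Python sketch below does exactly that; the four-city distance table is made up for illustration, and `shortest_tour` is a hypothetical helper name, not code from the Japanese study.

```python
from itertools import permutations

def shortest_tour(distances):
    """Brute-force TSP: try every round trip from city 0 and keep the shortest.

    `distances[i][j]` is the distance from city i to city j (hypothetical input).
    """
    n = len(distances)
    best_route, best_length = None, float("inf")
    # City 0 is "home"; every ordering of the remaining cities is one candidate trip.
    for order in permutations(range(1, n)):
        route = (0, *order, 0)  # leave home, visit each other city once, return home
        length = sum(distances[a][b] for a, b in zip(route, route[1:]))
        if length < best_length:
            best_route, best_length = route, length
    return best_route, best_length

# Made-up symmetric distances between four cities, for illustration only.
distances = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]
route, length = shortest_tour(distances)
print(route, length)
```

For this table it returns the route (0, 1, 3, 2, 0) with length 18. Because the loop tries every ordering of the other n − 1 cities, the work grows factorially, which is what makes exhaustive search impractical beyond a handful of cities.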

If you try to compute it by brute force (checking every possible combination), it quickly becomes immensely difficult, because the number of possible routes grows factorially with the number of cities; some algorithms and heuristics do much better than brute force, though. Amoebas placed in a system with 64 ‘cities’ (areas with nutrients that the amoeba wanted to reach) were able to solve the problem remarkably fast. They were still a bit slower than the very fastest machines, but they were close, and if our best, energy-intensive machines can barely beat a simple amoeba, maybe it’s an avenue worth researching further.
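The difficulty can be made concrete by counting routes. Fixing the home city leaves $(n-1)!$ orderings of the remaining cities, and, assuming the distance from A to B equals the distance from B to A, each route has the same length as its reverse. The number of distinct round trips through $n$ cities is therefore:

$$
R(n) = \frac{(n-1)!}{2}, \qquad R(64) = \frac{63!}{2} \approx 9.9 \times 10^{86}
$$

A hypothetical machine checking a billion routes per second would need roughly $3 \times 10^{70}$ years to enumerate all 64-city routes, which is why practical solvers rely on cleverer algorithms and heuristics instead.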

The other way around

Using biological structures for computation is one approach. Another is merging biology with chips, using the latter to augment the former. In this field too, things have advanced quickly.

For instance, in 2020, Elon Musk made headlines (as he so often does), unveiling a pig called Gertrude that had a coin-sized chip implanted into her brain.

“It’s kind of like a Fitbit in your skull with tiny wires,” the billionaire entrepreneur said on a webcast presenting the achievement. Earlier this year, his start-up, Neuralink, presented something even more remarkable: a monkey with a brain chip that allowed it to play computer games using only its brain activity.

The monkey first played computer games normally, with a joystick, while the chip recorded its brain activity during each action. Before long, the monkey was able to play the game directly with brain signals, without touching the joystick at all.
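How a recording can stand in for a joystick is easier to see with a toy model. Many brain-computer interface studies calibrate a decoder on data recorded while the subject uses a physical controller, then use it to predict movement from neural activity alone. The Python sketch below assumes the simplest such decoder, a linear least-squares fit; Neuralink’s actual method is not described here, and the three channels, firing rates and weights are synthetic values invented for illustration.

```python
import random

def fit_linear_decoder(rates, velocities):
    """Hypothetical decoder (not Neuralink's method): least-squares fit of
    velocity ~ w . rates, one weight per recording channel.

    Solves the normal equations (X^T X) w = X^T y by Gaussian elimination.
    """
    k = len(rates[0])
    xtx = [[sum(r[i] * r[j] for r in rates) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * v for r, v in zip(rates, velocities)) for i in range(k)]
    aug = [row + [b] for row, b in zip(xtx, xty)]  # augmented matrix [X^T X | X^T y]
    for col in range(k):
        # Partial pivoting: swap in the row with the largest entry in this column.
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, k):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, k + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back-substitution on the upper-triangular system.
    w = [0.0] * k
    for i in reversed(range(k)):
        w[i] = (aug[i][k] - sum(aug[i][j] * w[j] for j in range(i + 1, k))) / aug[i][i]
    return w

def decode(w, rates):
    """Predict joystick velocity as a weighted sum of channel firing rates."""
    return sum(wi * ri for wi, ri in zip(w, rates))

# Calibration phase: synthetic "firing rates" recorded while a joystick is used.
random.seed(0)
true_w = [0.8, -0.5, 0.3]  # hidden, made-up tuning of three channels
rates = [[random.uniform(0, 50) for _ in true_w] for _ in range(200)]
velocities = [decode(true_w, r) + random.gauss(0, 0.1) for r in rates]
w = fit_linear_decoder(rates, velocities)

# Control phase: no joystick, velocity is predicted from neural activity alone.
new_rates = [10.0, 20.0, 5.0]
print([round(x, 2) for x in w], round(decode(w, new_rates), 2))
```

In the calibration phase the fitted weights land very close to the hidden `true_w`, so in the control phase the decoder can turn `new_rates` into a velocity without any joystick input. Real systems record from many more channels, cope with noisier and drifting signals, and often use more elaborate decoders such as Kalman filters.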

Musk is applying for human trials next year, and several other researchers are also looking at different ways injectable chips may be used in humans.

It’s still early days, but more and more, we’re seeing ways in which organic matter and computers interact directly. There are different approaches, each with its own advantages and drawbacks, and while we’re not looking at organic robots or AI just yet, the way things are progressing, those no longer seem nearly as far-fetched as they did a mere decade ago.

Intertwining the biological with the digital offers distinct advantages, from energy efficiency to optimized computation, but at the same time, this is not an easy challenge, and not a pursuit free of concern. When the natural and artificial worlds merge, as in brain chipping, the waters can quickly become murky.

The implications and ethics of the field should be considered carefully. Too often, these implications come as little more than an afterthought. The iconic quote from Jurassic Park springs to mind, and it’s perhaps something the Elon Musks of the world should also think about:

Ian Malcolm [Jeff Goldblum]: Yeah, yeah, but your scientists were so preoccupied with whether or not they could, that they didn’t stop to think if they should.

The post Bits and Neurons: Is biological computation on its way? originally appeared on the HLFF SciLogs blog.