Artists Are Using Poisoned Pixel Cloaks to Protect Their Art From AI

Andrei Mihai

Artificial intelligence is quickly becoming the defining technology of our time, but the way some AI tools are built remains deeply controversial. In the world of image-generating AI, algorithms are often built on the backs of billions of scraped images, many of them made by artists who never gave their consent. For artists, who spend years or decades perfecting their craft and creating their own distinctive style, AI can feel like a direct competitor or, even worse, a thief.

There is not much recourse available for artists trying to protect their work. But a new tactic is gaining ground in digital art circles: poisoning the very data that feeds these AI systems. Two tools in particular, Nightshade and Glaze, are emerging as a kind of algorithmic resistance. Developed at the University of Chicago, these adversarial machine learning tools are designed to disrupt how generative models learn from images.

A War of Pixels and Principles

The idea of “fighting back” against AI using adversarial data is not new. In 2017, a student-run group at MIT illustrated how AI algorithms can be “tricked” into misidentifying objects. Using the now-famous cat/guacamole example, they showed how an adversarial attack changing only a few pixels can trick an AI into thinking a cat is guacamole (or vice versa). Turing Laureate Adi Shamir also presented his own work in the field of adversarial attacks at the 10th Heidelberg Laureate Forum in 2023.

Of course, most people are not trained in adversarial machine learning. This is why simple-to-use tools have become so popular.

Glaze, first on the scene, operates defensively. It essentially acts as a cloak, subtly modifying an image’s pixels so that, while the changes are imperceptible to human viewers, AI models perceive it as having a different style. This technique, known as adversarial perturbation, confuses AI models attempting to learn and replicate the artist’s unique style. “Glaze applies a ‘style cloak,’” the researchers explain, “effectively obscuring the underlying patterns and characteristics that define an artist’s distinctive visual language.”

“The cloaks needed to prevent AI from stealing the style are different for each image. Our cloaking tool, run locally on your computer, will “calculate” the cloak needed given the original image and the target style (e.g. Van Gogh) you specify,” the program’s authors write.
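To make the idea of "calculating" a cloak more concrete, here is a minimal sketch in Python. It is not Glaze's actual algorithm: the `extractor` matrix is a hypothetical stand-in for an AI model's feature extractor, the `cloak` function and its parameters are invented for illustration, and "imperceptible" is approximated by a simple per-pixel budget. The sketch only shows the general shape of an adversarial perturbation: nudge the pixels a little so the features move toward a target style.

```python
import numpy as np

def cloak(image, target_features, extractor, budget=0.03, steps=200, lr=0.01):
    """Toy cloak: shift the image's features toward target_features while
    keeping every pixel within `budget` of its original value."""
    delta = np.zeros_like(image)
    for _ in range(steps):
        residual = extractor @ (image + delta) - target_features
        grad = 2.0 * extractor.T @ residual        # gradient of squared feature distance
        delta = delta - lr * grad                  # move features toward the target
        delta = np.clip(delta, -budget, budget)    # keep the change small
        delta = np.clip(image + delta, 0.0, 1.0) - image  # keep pixels in [0, 1]
    return image + delta

rng = np.random.default_rng(0)
extractor = rng.normal(size=(8, 64)) / 8.0     # hypothetical linear "model"
original = rng.uniform(0.2, 0.8, size=64)      # a flattened 8x8 grayscale image
style_target = rng.uniform(0.2, 0.8, size=64)  # an image in the target style
target_features = extractor @ style_target

cloaked = cloak(original, target_features, extractor)
before = np.linalg.norm(extractor @ original - target_features)
after = np.linalg.norm(extractor @ cloaked - target_features)
max_change = np.abs(cloaked - original).max()
print(f"feature distance to target style: {before:.3f} -> {after:.3f}")
print(f"largest pixel change: {max_change:.3f}")
```

In this toy setting, the cloaked image ends up closer to the target style in feature space even though no pixel moves by more than the budget. Real models are deep, non-linear networks, so the actual optimization is considerably more involved.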

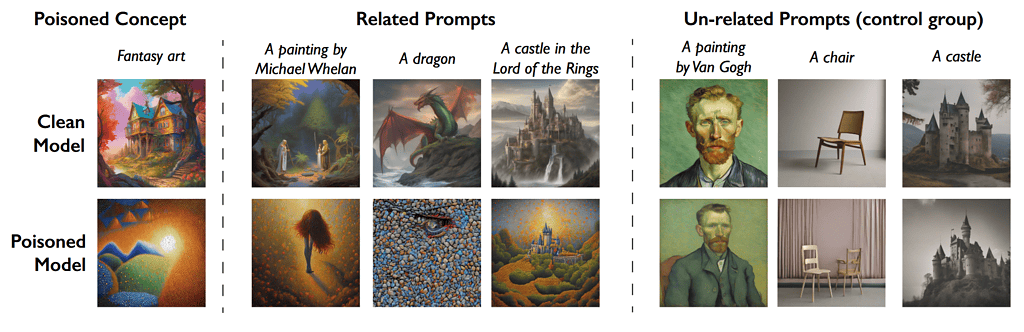

Nightshade, its more aggressive sibling, plays offense. It does not just hide style; it “poisons” content. It performs a more complex version of the cat/guacamole attack, making subtle alterations to the image that are not apparent to the human eye.

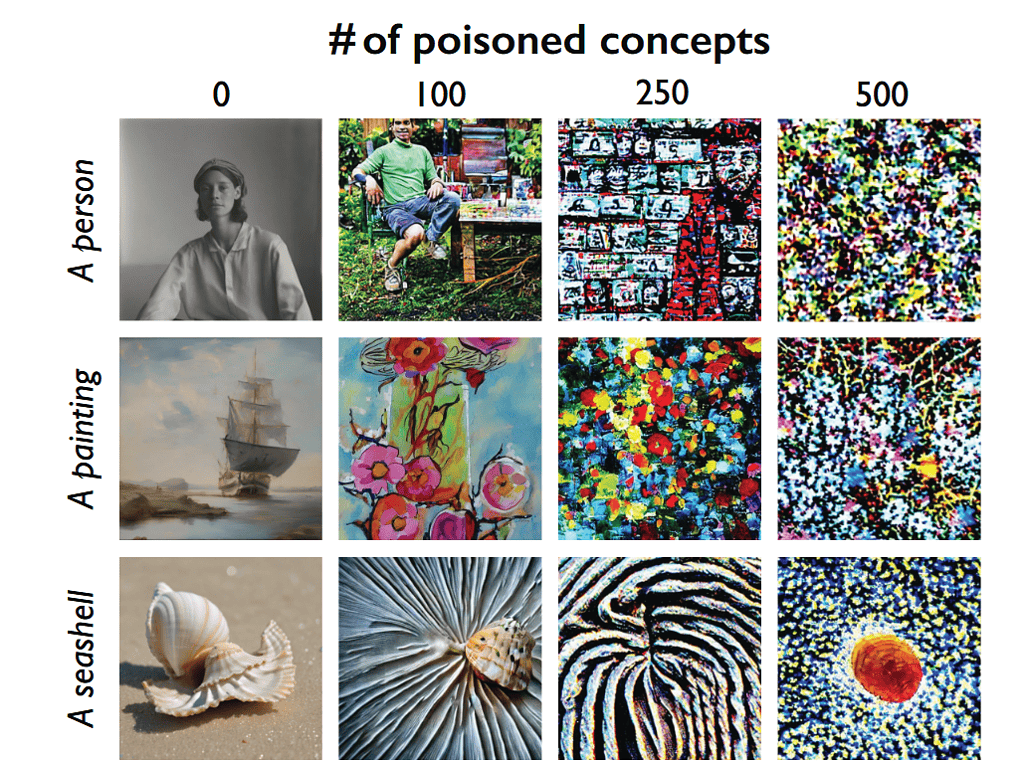

It uses a technique called multi-objective optimization, which adjusts the image’s pixels slightly so that when a diffusion model (like Stable Diffusion) encodes the image during training, the internal representation aligns with that of a completely different label. For instance, a poisoned image of a flower might have its internal representation shifted so that the model thinks it is learning about a car. The poisoned image is paired with its original (correct) label in the dataset (e.g., “flower”), but the image’s internal features now resemble something else (e.g., “car”). This creates a mismatch between visual features and text prompts in the training set. When enough of these poisoned examples are included in training, the model builds inaccurate associations, leading to distorted, nonsensical outputs.
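The label/feature mismatch described above can be illustrated with a deliberately simplified toy model. In the sketch below, "training" just means averaging the feature vectors of everything labeled "flower", which is an assumption made for illustration and not how a diffusion model actually learns. All names and numbers are hypothetical, and the point at which the concept flips is an artifact of the averaging, not a measurement of Nightshade.

```python
import numpy as np

rng = np.random.default_rng(1)
DIM = 16
flower_center = rng.normal(size=DIM)  # where real flower images sit in feature space
car_center = rng.normal(size=DIM)     # where real car images sit

def sample(center, n):
    """Draw n noisy feature vectors around a concept's center."""
    return center + 0.1 * rng.normal(size=(n, DIM))

def learned_flower_concept(n_clean, n_poison):
    """Average the features of every item labeled 'flower'.
    Poisoned items carry the 'flower' label but car-like features."""
    clean = sample(flower_center, n_clean)
    poison = sample(car_center, n_poison)
    return np.vstack([clean, poison]).mean(axis=0)

def closer_to_car(concept):
    to_car = np.linalg.norm(concept - car_center)
    to_flower = np.linalg.norm(concept - flower_center)
    return bool(to_car < to_flower)

results = {}
for n_poison in (0, 50, 300):
    concept = learned_flower_concept(n_clean=200, n_poison=n_poison)
    results[n_poison] = closer_to_car(concept)
    print(f"{n_poison:3d} poisoned items -> 'flower' looks like a car: {results[n_poison]}")
```

With few poisoned items the learned "flower" concept stays near real flowers; once the mislabeled features dominate, it drifts toward "car". The sketch only conveys the mechanism of corrupted associations, not how many poisoned images a real model would need.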

This “poison” can also spread in two ways. Nightshade has been shown to produce effects that transfer across similar models: poisoning one diffusion model can also corrupt related models, because they often share architecture or data sources. It also causes a “bleed-through” effect within a model: poisoning one prompt (e.g., “dog”) can corrupt related prompts (like “puppy” or “golden retriever”) due to semantic overlap in the AI’s language-image mapping.

The aim is to make training data unreliable enough that companies are forced to rethink their methods (or pay for clean data). Even if poisoning doesn’t break models outright, it increases the training cost, and that alone could shift the economics of AI development.

“Nightshade works similarly as Glaze, but instead of a defense against style mimicry, it is designed as an offense tool to distort feature representations inside generative AI image models. Like Glaze, Nightshade is computed as a multi-objective optimization that minimizes visible changes to the original image. While human eyes see a shaded image that is largely unchanged from the original, the AI model sees a dramatically different composition in the image,” write the creators of Nightshade.

By injecting a sufficient number of these “poisoned” images into the training dataset, artists aim to increase the computational and resource costs for AI developers to train their models on unlicensed data. If AI is trained with poisoned data, the resulting models may exhibit unpredictable and nonsensical outputs. The creators of Nightshade continue:

“For content owners and creators, few tools can prevent their content from being fed into a generative AI model against their will. Opt-out lists have been disregarded by model trainers in the past, and can be easily ignored with zero consequences. They are unverifiable and unenforceable, and those who violate opt-out lists and do-not-scrape directives can not be identified with high confidence.”

A New Arms Race?

Both Glaze and Nightshade are engineered to be robust and resilient to common transformations like cropping, compression, or resampling. But this battle is far from one-sided.

AI developers are already exploring countermeasures. These include cleaning datasets with filters or using anomaly detection tools. The most common methods seem unable to reliably filter out Nightshade, but an arms race is likely underway, and non-public tools may be more capable.
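As a rough illustration of what an anomaly detection filter might look like, the sketch below flags training items whose features sit unusually far from the typical item with the same label. This is a generic outlier check and not a tool any AI developer is known to use; the `flag_outliers` function, the cutoff, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np

def flag_outliers(features, cutoff=3.0):
    """Flag rows whose distance from the per-label median is unusually large,
    measured in units of the median absolute deviation (MAD)."""
    center = np.median(features, axis=0)
    dist = np.linalg.norm(features - center, axis=1)
    med = np.median(dist)
    mad = np.median(np.abs(dist - med)) + 1e-12  # avoid division by zero
    return (dist - med) / mad > cutoff

rng = np.random.default_rng(2)
clean = rng.normal(loc=0.0, scale=1.0, size=(95, 8))   # features of ordinary items
poison = rng.normal(loc=6.0, scale=1.0, size=(5, 8))   # hypothetical off-concept features
features = np.vstack([clean, poison])

flags = flag_outliers(features)
print("poisoned items flagged:", int(flags[95:].sum()), "of 5")
print("clean items flagged:   ", int(flags[:95].sum()), "of 95")
```

The toy filter works only because the synthetic poison is placed far from the clean data in the very feature space the filter inspects. A real poisoning tool is built to avoid standing out this way, which is part of why filtering is hard in practice and why a few clean items are flagged by mistake even here.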

From the artists’ perspective, data poisoning can be viewed as a form of digital self-defense, a necessary response to the widespread practice of AI companies training their models on copyrighted artwork without consent or compensation. It is an asymmetrical fight. Artists, often working independently and without institutional support, are taking on some of the world’s most powerful AI companies. But unlike lawsuits or protest letters, poisoning affects the one thing those companies depend on most: data.

Poisoning challenges the assumption that data is neutral and abundant. It forces a reckoning with the source of much of AI’s data: the unpaid, uncredited labor of millions of creators.

However, this could result in an escalating arms race, with unpredictable outcomes for the security of AI systems. Some critics argue that intentionally corrupting training data could have unintended negative consequences for the quality and reliability of AI models, potentially affecting the various sectors that rely on these technologies.

Technology Shifts Faster than Society

It is unclear whether data poisoning will become common or whether it is even scalable. Equally uncertain is how artists’ careers will be affected by these developments in the long run. Yet at its core, the problem is a familiar one: technology developed faster than we could decide, as a society, how to use it.

There is also a darker side to all this. Poisoning techniques, while currently aimed at protecting artwork, could in theory be used maliciously. What began as a defense against artistic theft could, in the wrong hands, be used to corrupt datasets in critical areas like healthcare or finance, where AI failure could have real-world consequences. Poisoned data could bias medical diagnostics or misinform autonomous vehicles. In a world increasingly reliant on machine learning, that is another issue flying under the radar.

We tend to think of data as neutral, dispassionate, and “clean.” But it is nothing of the sort. It is shaped by power and sometimes extracted without proper consent. Now, data is being subtly contested in ways that only machines can perceive. With every poisoned pixel, artists are making the claim that the raw material of artificial intelligence stems from human labor which is creative, emotional, and fallible, but very much human.

This fight is about more than art; it is about what kind of intelligence we are building, and how far we are willing to go to achieve it. Tools like Nightshade are still very targeted and niche. But we are still in the early days of the technology, and who knows how this fight will progress? After all, who could have guessed this would even be a point of discussion a few years ago?

The post Artists Are Using Poisoned Pixel Cloaks to Protect Their Art From AI originally appeared on the HLFF SciLogs blog.